Category Archives: Jitter Tutorials

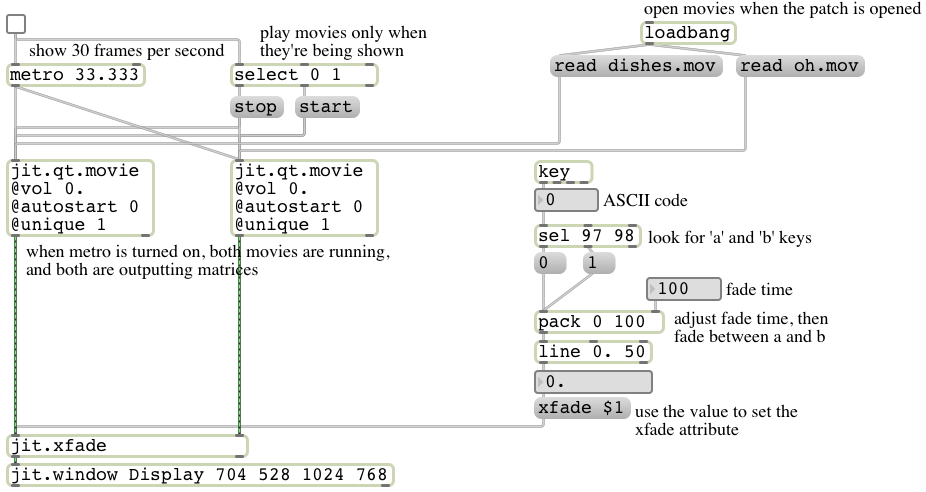

A-B video crossfade

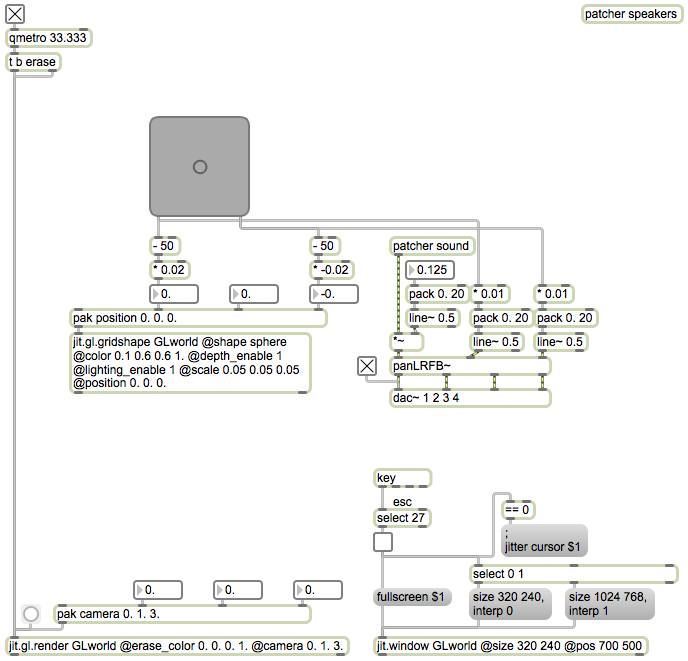

Quadraphonic panning with mouse control and OpenGL visualization

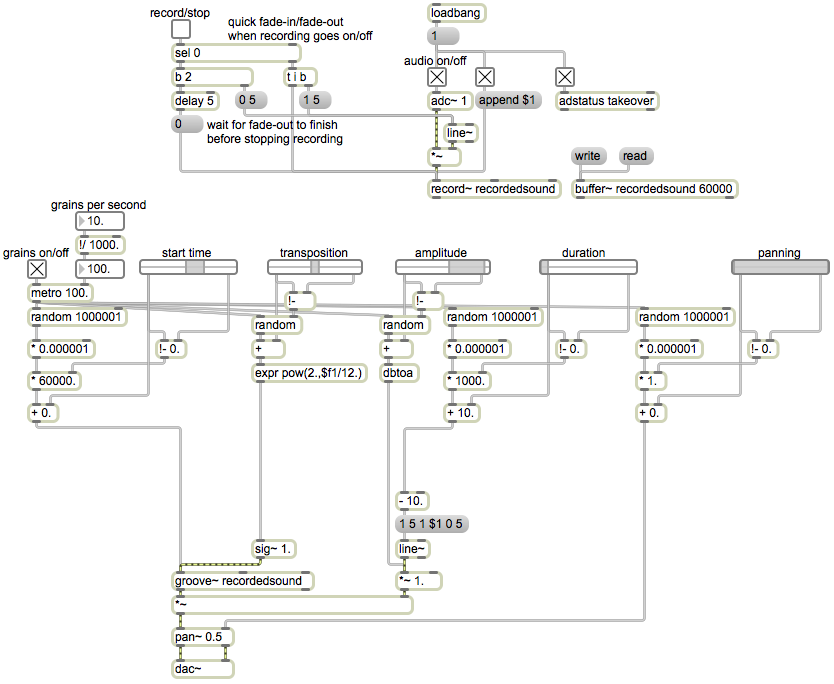

Single stream of grains from a buffer~

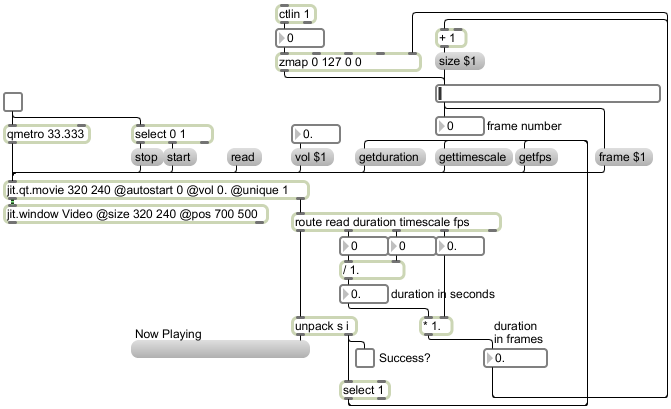

Attributes of jit.qt.movie

The jit.qt.movie object, for playing QuickTime videos, exposes many of the features made available by QuickTime, so it has a great many attributes and understands a great many messages. You can set some of its attributes with messages, such as its playback rate (with a rate message) or its audio volume (with a vol message). Other attributes are traits of the loaded movie file, such as its duration (the duration attribute), and can’t be altered (at least not without altering the contents of the movie file itself). This patch shows how you can query jit.qt.movie for the state of its attributes, use that information to derive other information, and then use messages to tell jit.qt.movie what to do, such as using a frame message to tell jit.qt.movie what frame of the video to go to.

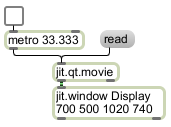

Play a QuickTime movie

This shows a simple way to play a QuickTime movie using Jitter. When the jit.qt.movie object receives a read message, it opens a dialog box allowing you to select a movie file. As soon as you select a movie and click OK, jit.qt.movie begins to retrieve frames of video from that file and place them in memory, at the frames-per-second rate specified in the movie file itself. (It also begins playing the audio soundtrack of the video.) But for you to see the video, you need to create a window in which to display it, and you need to tell that window what to display. The jit.window object creates a window, with whatever name you give it in the first argument, at the location you specify in the next four arguments (the pixel offset coordinates of the left, top, right, and bottom edges of your window). Then, each time jit.qt.movie receives a bang, it sends out information about the location of the image in memory, which jit.window will refer to and draw. Because we expect that a video might have a frame rate as high as 30 frames per second, we use a metro to send a bang 30 times per second, i.e., every 33.333 ms. So, jit.qt.movie takes care of grabbing each frame of the video and putting it in memory, metro triggers it 30 times per second to convey that memory location to jit.window, and jit.window refers to that memory location and draws its contents.
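The 33.333 ms figure is simply the reciprocal of the frame rate expressed in milliseconds. As a minimal sketch in Python (not part of the patch; the function name `metro_interval_ms` is made up for illustration), the interval for any target rate could be computed like this:

```python
def metro_interval_ms(frames_per_second):
    """Return the time between bangs, in milliseconds, for a given rate.

    Hypothetical helper for illustration only; in the patch this value
    is simply typed in as the argument of the metro object.
    """
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


# 30 bangs per second works out to one bang roughly every 33.333 ms.
interval = metro_interval_ms(30)
```

For a movie with a lower frame rate, the same arithmetic gives a longer interval, for example 1000 / 15 for a 15 fps movie.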

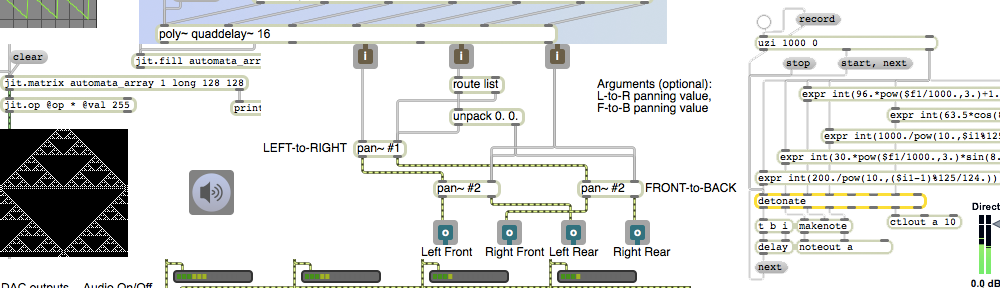

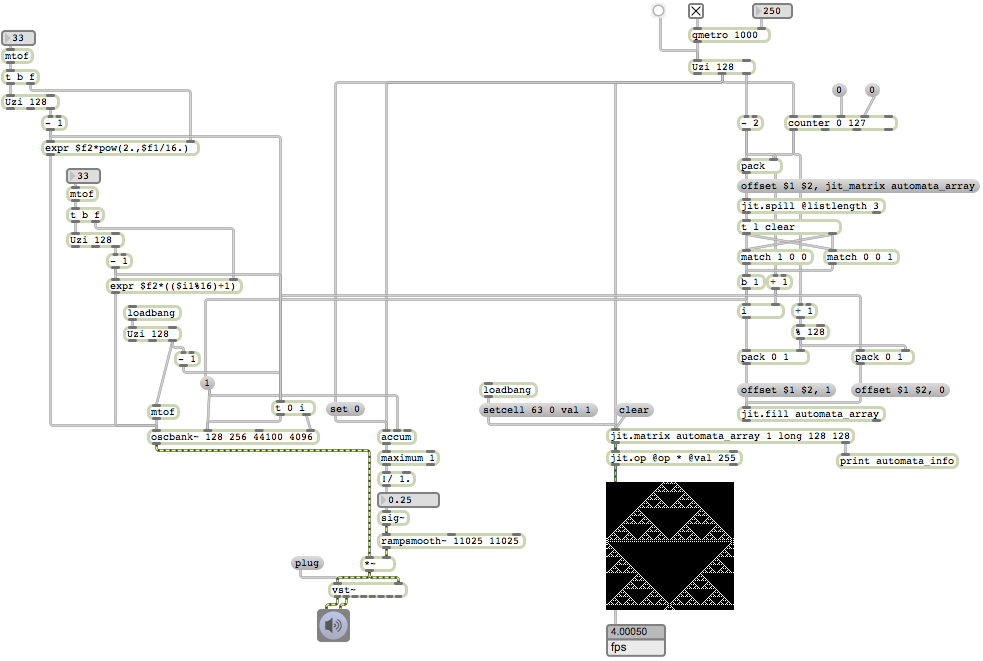

Oscillators controlled by cellular automata

Relevant to the discussion of musical applications of cellular automata, here is a patch that controls a bank of 128 oscillators based on the on-off state of a row of cells in a Jitter matrix that is generated by some very simple rules of a cellular automaton.

I won’t try to explain every detail of this patch, but will point to a few main features. The interval of the qmetro determines how frequently the program reads a row of on-off states (1s or 0s) from a matrix, uses them to turn oscillators on or off in an oscbank~ object, and generates a new row of on-off states according to some simple rules (which are then visualized in the jit.pwindow object). The number boxes on the left are for choosing the fundamental pitch of the oscillators. The number box on the top left produces 8 octaves of frequencies of a 16-notes-per-octave equal-tempered scale; the other number box tunes the oscillators to 16 harmonics of the fundamental pitch. The amplitude is adjusted in inverse proportion to the number of oscillators being played. If so desired, one can load a VST plug-in and run the sound through that for added processing.
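To make the tuning arithmetic concrete, here is a rough Python sketch (not the patch itself) of how 128 frequencies could be derived for the equal-tempered case, and how a per-oscillator amplitude could be scaled by the number of active cells. The function names are hypothetical, and the exact amplitude scaling used in the patch is an assumption; the text only says it is inversely proportional to the number of oscillators playing.

```python
def equal_tempered_bank(fundamental_hz, notes_per_octave=16, octaves=8):
    """Frequencies of an equal-tempered scale above a fundamental.

    With 16 notes per octave over 8 octaves this yields 128 values,
    one per oscillator. Step k is the fundamental times 2^(k/16).
    """
    count = notes_per_octave * octaves
    return [fundamental_hz * 2 ** (k / notes_per_octave) for k in range(count)]


def per_oscillator_amplitude(cells):
    """Amplitude for each active oscillator, given a row of 0/1 cells.

    Assumption: amplitude is 1/n for n active cells, so the summed
    level stays roughly constant. Returns 0.0 when nothing is on.
    """
    active = sum(1 for cell in cells if cell)
    return 1.0 / active if active else 0.0


bank = equal_tempered_bank(55.0)
amp = per_oscillator_amplitude([1, 0, 1, 1])
```

Every 16th entry of `bank` is one octave (a factor of 2) above the entry 16 steps earlier, which is what makes the scale equal-tempered with 16 divisions per octave.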

Stop-action slideshow (backward)

Here’s an example of algorithmic video editing in Jitter. The goal is to create a stop-action slideshow of single frames of video, stepping through a video in 1-second increments. And just to make it a bit weirder and more challenging, we’ll step through the movie backward, from end to beginning.

Some information we might need to know in order to do this properly is the frame rate (frames per second) of the video so that we know how many frames to move ahead or backward in order to move one second’s worth of time. We might also want to know the total number of frames in the video, so we know where to begin and end. Finally, we need to make a decision about how quickly we want to step through the “slideshow”. In this example, by default we step through it one second at a time, but we make this rate adjustable with a number box.

When you read in a movie (it should be at least several seconds long, since we’re going to be leaping through it in 1-second increments) jit.qt.movie sends out a ‘read’ message reporting whether it read the movie file successfully. If it did, we request information about the frames per second (fps) and number of frames (framecount), information that’s contained in the movie file itself, and that jit.qt.movie can provide for us. We use that information to calculate the duration of the movie in seconds (framecount/fps), and to set the step size and limit of our stepping calculations.

The slideshow step calculator works like this. Starting at frame 0, we use the + object to add one second’s worth of frames, moving ahead one second. We use the % (modulo) object to keep the numbers within the range of total frames in the video (% is most useful for keeping numbers cycling within a given range). Then, because we actually want to be moving backward rather than forward, we subtract the frame number from the total number of frames in the !- object. (You could omit that object if you wanted your slideshow to move forward.) We send the resulting frame number to jit.qt.movie as part of a ‘frame’ message to cause it to go to that frame, and then we send a bang right after that to cause it to send its matrix information to jit.window. We also send the original number (before the !- object) to a pipe object, which delays it a certain number of milliseconds and then passes it right back into the i object at the top to start the process over. (This will go on endlessly until pipe receives a ‘stop’ message that causes it to cancel its scheduled next output.)
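The arithmetic of the step calculator can be sketched in a few lines of Python. This is only an illustration of the +, %, and !- chain described above, not a translation of the patch: the function names are hypothetical, fps is assumed to be a whole number, and the pipe delay is left out because it affects timing rather than the frame numbers.

```python
def duration_seconds(framecount, fps):
    """Movie duration derived from the two queried attributes."""
    return framecount / fps


def slideshow_frames(framecount, fps, steps):
    """Return the frame numbers requested over the first `steps` steps.

    `current` plays the role of the value stored in the i object.
    """
    frames = []
    current = 0  # start at frame 0
    for _ in range(steps):
        # + object: move ahead one second; % object: wrap within the movie
        current = (current + fps) % framecount
        # !- object: count from the end so the slideshow runs backward
        frames.append(framecount - current)
        # In the patch, `current` now passes through pipe and back to i.
    return frames


# A 10-second movie at 30 fps has 300 frames.
length = duration_seconds(300, 30)
first_steps = slideshow_frames(300, 30, 3)
```

With these example numbers the first three requested frames are 270, 240, and 210, each one second earlier than the last. Dropping the `framecount - current` subtraction gives the forward version mentioned above.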

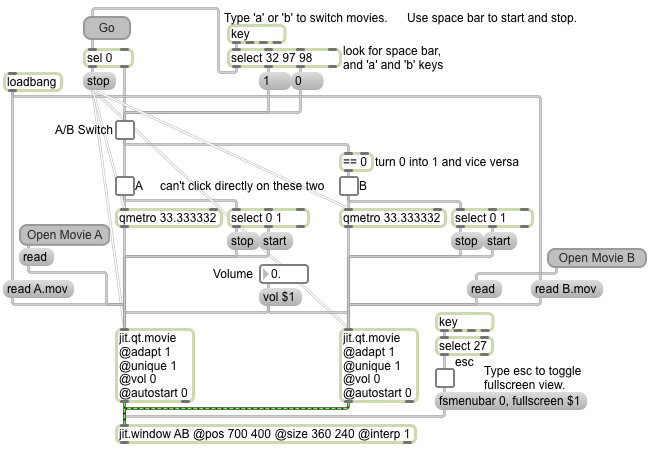

A-B video switcher

This is a more refined sort of A-B video switcher patch than the one shown in the “Simplest possible A-B video switcher” example. It has a considerably more sophisticated user interface when viewed in Presentation mode. It will try to open two video files called “A.mov” and “B.mov”, and it also provides buttons for the user to open any other videos to use as the A and B rolls.

Another possibly significant difference is that in this example we stop the movie that is not being viewed, and then when we switch back to that movie we restart it from the place it left off. In the previous example both movies were running all the time. If you need the two movies to stay synchronized at all times, you can either leave them both running as in the previous example, or, whenever you stop one jit.qt.movie object, you can get its current ‘time’ attribute value and use that value to set the ‘time’ attribute of the other jit.qt.movie object when you start that one. (That’s not demonstrated in this example.)

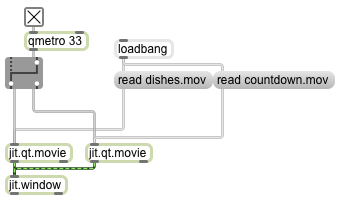

Simplest possible A-B video switcher

This is the simplest possible way to switch between two videos. When the patch is opened we read in a couple of example video clips (which reside in ./patches/media/ within the Max application folder, so they should normally be in the file search path). Because the default state of the ‘autostart’ attribute in jit.qt.movie is 1, the movies start playing immediately. Because the default state of the ‘loop’ attribute in jit.qt.movie is 1, the movies will loop continually. Click on the toggle to start the qmetro; the bangs from the qmetro will go out one outlet of the ggate (the outlet being pointed to by the graphic), and will thus go to one of the two jit.qt.movie objects and display that movie. Click on the ggate to toggle its output to the other outlet; now the bangs from the qmetro are routed to the other jit.qt.movie object, so we see that movie instead. (Note: You can also send a bang in the left inlet of ggate to toggle it back and forth, or you can send it the messages 0 and 1 to specify left or right outlet.)

If you don’t know the difference between a metro object and a qmetro object, you can read about it on this page on “Event Priority in Max (Scheduler vs. Queue)”. In short, qmetro is exactly like metro except that it runs at a lower level of priority in Max’s scheduler; when Max’s “Overdrive” option is checked, Max will prioritize timing events triggered by metro over those triggered by qmetro. The precise timing of a video frame’s appearance is less crucial than the precise timing of an audio or musical event: we usually don’t notice when a frame of video appears a fraction of a second late or even occasionally is missing altogether, but we do notice when audio drops out or when a musical event is late. So it makes sense to trigger video display with the low-priority scheduler most of the time.
