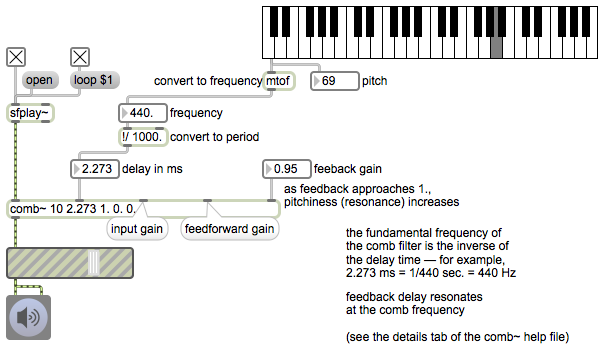

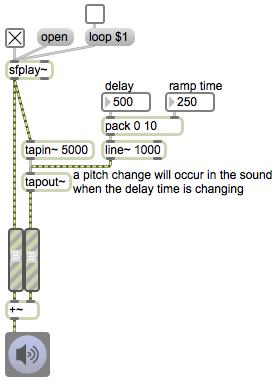

This patch demonstrates how to adjust the delay time of a comb filter to make the filter correspond to a desired fundamental pitch.

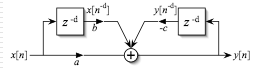

The filtering formula used by the comb~ object is

y[n] = a x[n] + b x[n-(DR/1000)] + c y[n-(DR/1000)]

where R is the sampling rate, D is a delay time in milliseconds (so DR/1000 is the delay expressed in samples), x[n] is the current input sample, y[n] is the current output sample, and a, b, and c are gain scaling factors.
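As a rough illustration of that formula outside of Max, here is a minimal Python sketch. It is not the comb~ implementation. The function name `comb` and its parameter names are hypothetical, and the sketch makes two simplifying assumptions: the delay is rounded to a whole number of samples, and all samples before the start of the signal are treated as zero.

```python
def comb(x, delay_ms, a, b, c, sample_rate=44100):
    """Apply y[n] = a*x[n] + b*x[n-N] + c*y[n-N], where N = D*R/1000.

    Assumptions (not taken from comb~ itself): N is rounded to an
    integer number of samples, and the filter starts from silence.
    """
    # Delay in samples, at least 1 so the feedback term is always in the past.
    n_delay = max(1, round(delay_ms * sample_rate / 1000))
    y = [0.0] * len(x)
    for n in range(len(x)):
        # Delayed input and delayed output; zero before the signal begins.
        x_del = x[n - n_delay] if n >= n_delay else 0.0
        y_del = y[n - n_delay] if n >= n_delay else 0.0
        y[n] = a * x[n] + b * x_del + c * y_del
    return y
```

Feeding in a single impulse shows the effect of the feedback term: with b = 0 and c = 0.5, the impulse comes back once every delay period, at half the amplitude each time.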

That formula can be shown diagrammatically like this.

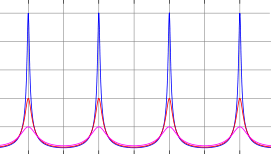

In the patch we convert a MIDI pitch number into a frequency in hertz, then use that frequency to calculate the correct delay time for the filter: the delay in milliseconds is 1000 divided by the frequency, so that the delay equals one period of the desired fundamental. Using delay feedback (a past y[n] value) with feedback gain approaching 1 creates strong resonance at the comb frequency and its harmonics, yielding an inverted comb response pattern (narrow peaks in place of notches), resulting in a strong imposition of the fundamental pitch and a buzzy timbre.
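The pitch-to-delay calculation can be sketched in a few lines of Python. This assumes the standard equal-tempered mapping in which MIDI note 69 is A at 440 Hz. The function names `midi_to_hz` and `delay_ms_for_pitch` are hypothetical and are not objects in the patch.

```python
def midi_to_hz(midi_note):
    """Equal-tempered frequency in Hz, assuming MIDI 69 = 440 Hz."""
    return 440.0 * 2.0 ** ((midi_note - 69) / 12.0)


def delay_ms_for_pitch(midi_note):
    """Delay time in milliseconds equal to one period of the pitch."""
    return 1000.0 / midi_to_hz(midi_note)
```

For example, MIDI note 69 gives 440 Hz and a delay of about 2.27 ms, while the A an octave lower (MIDI 57, 220 Hz) needs twice that delay, about 4.55 ms.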