Functional Anatomy of Language

We have been working toward the development of a new model of the functional anatomy of language. The model is largely based on insights from the cortical organization of vision, particularly recent characterizations of the ventral and dorsal processing streams, in which the ventral stream supports visual recognition and the dorsal stream supports visuomotor integration (e.g., visually guided reaching).

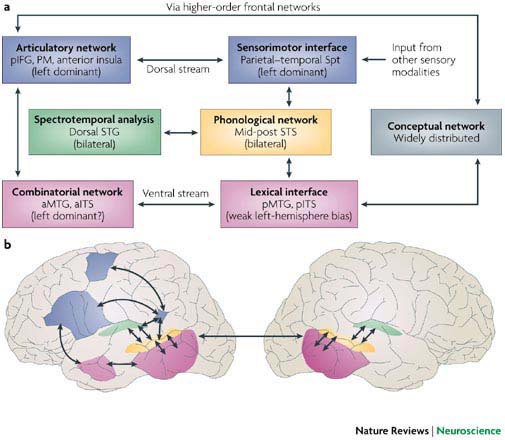

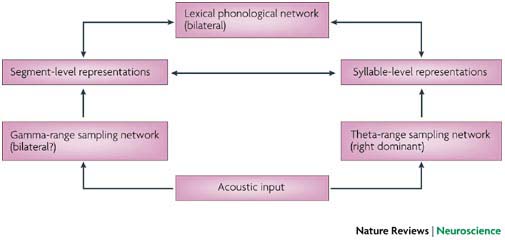

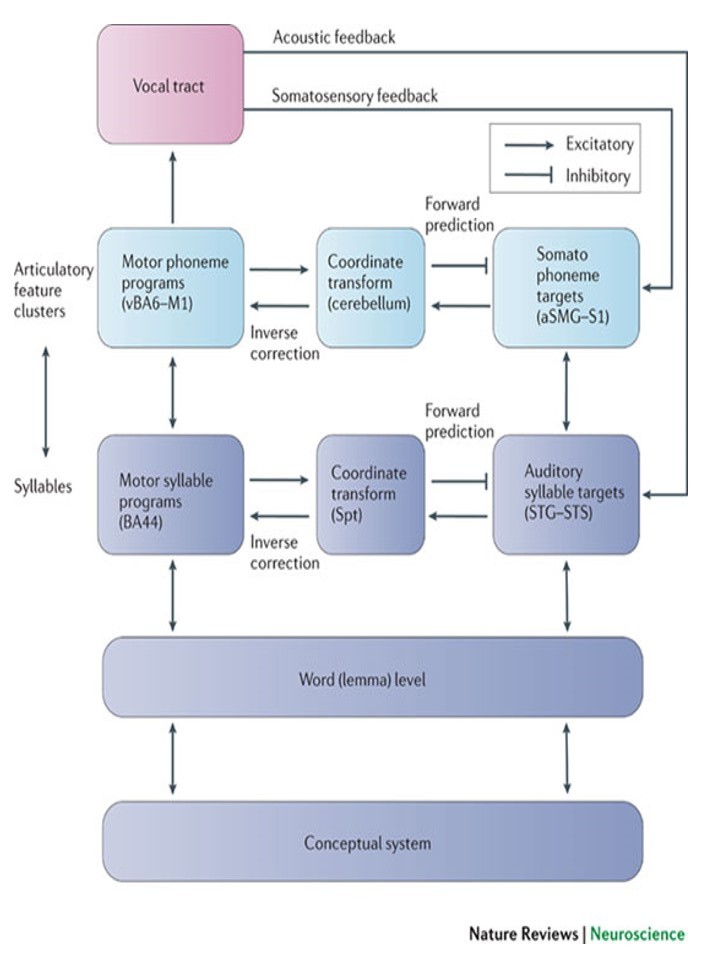

The following is an overview of the model. Early cortical stages of speech perception involve auditory fields in the superior temporal gyrus (STG) bilaterally. This cortical processing system then diverges into two processing streams: a ventral stream, which is involved in mapping sound onto meaning, and a dorsal stream, which is involved in mapping sound onto articulatory-based representations. The dorsal stream is fairly strongly left-lateralized; the ventral stream also appears to be left-dominant, but perhaps to a lesser degree. The ventral stream projects ventro-laterally and involves cortex in the superior temporal sulcus and ultimately in the temporal-parietal-occipital (T-P-O) junction. T-P-O junction structures serve as an interface between sound-based representations of speech in the superior temporal gyri (bilaterally) and widely distributed conceptual representations. In psycholinguistic terms, this sound-meaning interface system corresponds to the lemma level of representation. The dorsal stream projects dorso-posteriorly toward the parietal lobe and ultimately to frontal regions. This dorsal circuit provides a mechanism for the development and maintenance of “parity” between auditory and motor representations of speech. Although the proposed dorsal stream represents a very tight connection between processes involved in speech perception and speech production, it does not appear to be a critical component of speech perception under normal (ecologically natural) listening conditions, that is, when speech input is mapped onto a conceptual representation. Some degree of bidirectionality is also proposed, such that, for example, sound-based representations of speech play a role not only in speech perception but also in speech production. This model can account for a fairly wide range of data, including recent functional imaging results and classic aphasia symptom complexes.
It also fits naturally with current thinking about the organization of perceptual and sensorimotor systems (i.e., the model treats language as a subcomponent of a larger organizational scheme), and, further, it provides a natural account of the neural organization of verbal working memory (a dorsal stream function). The accompanying figures show the proposed model components and their topographic locations.

This model, as initially proposed in Hickok & Poeppel (2000) and Hickok (2000b), was developed primarily on the basis of existing data. Much of the empirical work in our lab has focused on testing various predictions of the model, as well as refining its details.

Bilateral Organization of Speech Perception.

The model predicts that either hemisphere should have the capacity to construct representations of speech input that are sufficient to allow access to the mental lexicon. This prediction was tested in a group of aphasic patients with left-hemisphere damage and in one split-brain patient, using a simple auditory word-to-picture matching paradigm. Based on previous findings from the early 1980s (now largely ignored), we predicted that single-word comprehension would be quite good overall, both in aphasia and in the isolated right hemisphere. We also predicted that when comprehension errors occurred, they would tend to be semantic in nature (selecting a picture of a moose instead of the target bear) and would less often be phonemic in nature (selecting a pear instead of the bear). These predictions were confirmed both in the aphasic group and in the split-brain patient (Barde et al. 2000, 2001). We are currently testing this prediction further using the intracarotid amobarbital (Wada) procedure.
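To make the semantic-versus-phonemic error distinction concrete, here is a minimal sketch of how responses in a word-to-picture matching task could be scored. It assumes, hypothetically, that each trial shows the target picture together with one semantic foil and one phonemic foil, and that an error is labeled by which foil was chosen; the source does not describe the actual scoring procedure, and the names `Trial`, `classify`, and `tally` are illustrative only.

```python
"""Sketch: labeling responses in an auditory word-to-picture matching task.

Hypothetical assumptions (not the published scoring procedure): each trial
pairs the target picture with one semantic foil and one phonemic foil, and
an error is labeled by which foil the participant selected.
"""
from collections import Counter
from typing import NamedTuple


class Trial(NamedTuple):
    target: str         # picture named by the spoken word, e.g. "bear"
    semantic_foil: str  # meaning-related distractor, e.g. "moose"
    phonemic_foil: str  # sound-related distractor, e.g. "pear"
    response: str       # picture the participant actually selected


def classify(trial: Trial) -> str:
    """Label one response as correct, semantic, phonemic, or other."""
    if trial.response == trial.target:
        return "correct"
    if trial.response == trial.semantic_foil:
        return "semantic"
    if trial.response == trial.phonemic_foil:
        return "phonemic"
    return "other"


def tally(trials):
    """Count each response type across a list of trials."""
    return Counter(classify(t) for t in trials)


if __name__ == "__main__":
    # Made-up responses for illustration only (bear / moose / pear example).
    trials = [
        Trial("bear", "moose", "pear", "bear"),
        Trial("bear", "moose", "pear", "moose"),
        Trial("bear", "moose", "pear", "pear"),
    ]
    counts = tally(trials)
    print(counts["correct"], counts["semantic"], counts["phonemic"])  # 1 1 1
```

Under the model's prediction, a real tally would show the "semantic" count exceeding the "phonemic" count; the toy data above deliberately make no such claim.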

Auditory cortex involvement in speech production.

The model predicts that left auditory cortical fields play a role not only in speech perception but also in speech production. This prediction was tested in a 4 Tesla (4T) fMRI experiment in which participants covertly named visually presented pictures (Hickok et al. 2000). That study showed activation in ventral posterior visual areas and left inferior frontal areas, as other studies have found. It also showed, in every subject, activation in the left superior temporal gyrus, confirming that auditory-related fields are active during speech production. Existing neuropsychological and MEG data helped constrain the interpretation of our fMRI result (which by itself says little about the function or timing of the activation) and suggest that this activity reflects access to phonemic representations that are ultimately used to guide articulatory processes. Additional recent fMRI studies (Okada, Smith, Humphries, & Hickok, in press, 2003) have shown that this left STG region exhibits “length effects” in naming (naming longer words leads to longer reaction times and greater brain activation), which further implicates this region in phonological-level processes, since length is a property of the phonological representation of a word. This line of work bears on a long-standing debate in psycholinguistic theory over whether input and output systems are shared or independent (Hickok, 2001).

Auditory-motor integration.

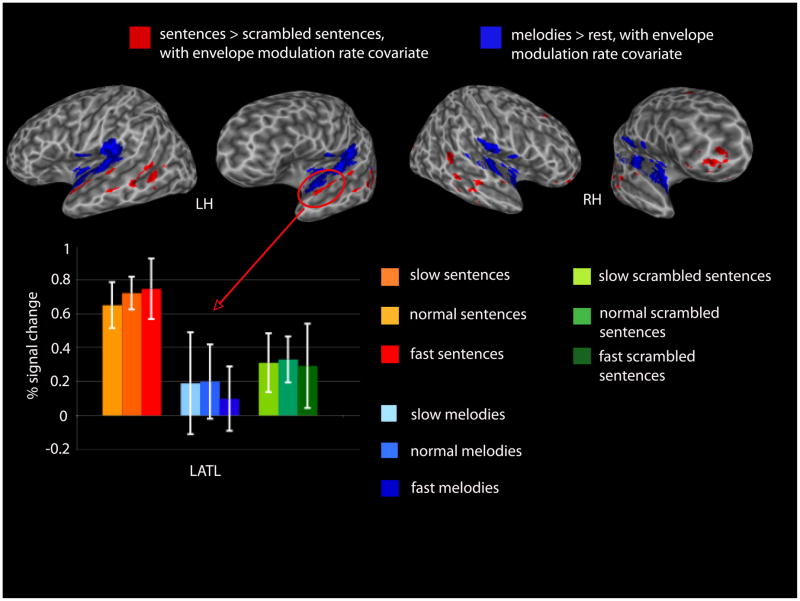

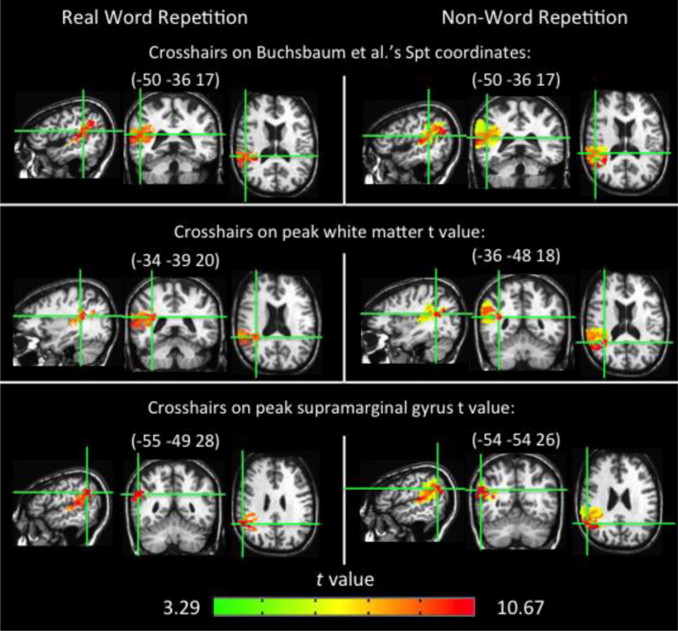

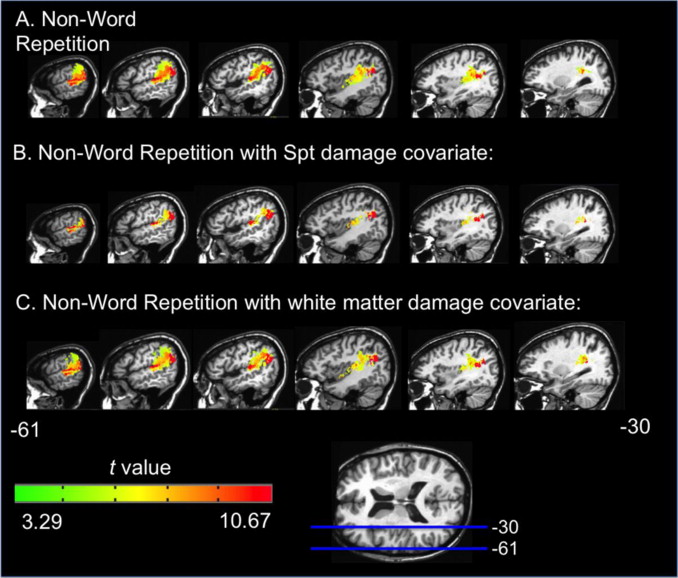

The model predicts the existence of an auditory-motor integration circuit that maps between auditory representations in the superior temporal gyrus and motor-articulatory codes in frontal cortex. Reasoning from vision work, we predicted an intermediary region in the inferior parietal lobe that would show both sensory and motor responses (single units in parietal visuomotor integration regions show such response properties). We tested this prediction using fMRI: participants listened to a set of three nonsense words and then silently rehearsed them for up to 27 seconds. We looked for regions that activated during both the perceptual and the “motor” (rehearsal) phases of the trial. We found several regions with such properties in each participant, including the predicted site at the boundary of the parietal and temporal lobes, which we have termed Spt (Buchsbaum et al. 2001). In that study, Spt activity was highly correlated with activity in area 44 in Broca’s area (r > .95), suggesting a tight functional connection with frontal systems. Recent work has examined the stimulus specificity of this auditory-motor integration circuit by contrasting speech stimuli with melodic stimuli (novel two-measure piano melodies). Results show that Spt activates equivalently when subjects listen to and then rehearse/hum either speech or melodies (Hickok et al. 2003). This suggests that the network performs auditory-motor integration in a more domain-general way, not only for speech. Current fMRI studies are exploring (1) the effects of learning on the involvement of this network (as a sequence becomes more familiar, its rehearsal should rely less on sensory representations, and hence less on the auditory-motor integration circuit), and (2) the effects of mapping the auditory pattern onto non-oral motor effectors.
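The logic of searching for regions that respond in both the listening and the rehearsal phase, and of measuring coupling between two regions, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the analysis used in Buchsbaum et al. (2001): each region is reduced to one hypothetical summary statistic per trial phase, a region counts as auditory-motor only if it exceeds a threshold in both phases (a minimum-statistic conjunction), and coupling is taken to be the Pearson correlation of two time courses. The function names, region labels, threshold, and numbers are all hypothetical.

```python
"""Sketch of a sensory-plus-motor conjunction test and an ROI correlation.

Hypothetical assumptions, not the published analysis:
- each region is summarized by one statistic (e.g., a t-value) per trial phase;
- a region counts as auditory-motor only if it exceeds the threshold in BOTH
  the listening and the rehearsal phase (minimum-statistic conjunction);
- functional coupling is the Pearson correlation of two time courses.
"""
from math import sqrt


def conjunction_regions(listen_stats, rehearse_stats, threshold):
    """Return, sorted, the regions above `threshold` in both phases."""
    shared = listen_stats.keys() & rehearse_stats.keys()
    return sorted(
        name
        for name in shared
        if min(listen_stats[name], rehearse_stats[name]) > threshold
    )


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length time courses."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series of at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(ss_x * ss_y)


if __name__ == "__main__":
    # Made-up statistics with hypothetical region labels, for illustration only.
    listen = {"auditory_only": 6.1, "sensorimotor": 4.2, "motor_only": 1.5}
    rehearse = {"auditory_only": 0.8, "sensorimotor": 3.9, "motor_only": 5.0}
    print(conjunction_regions(listen, rehearse, threshold=3.0))  # ['sensorimotor']

    # Two made-up time courses that rise and fall together.
    region_a = [0.1, 0.5, 0.9, 0.4, 0.2]
    region_b = [0.3, 0.6, 1.1, 0.5, 0.2]
    print(round(pearson_r(region_a, region_b), 3))
```

Taking the minimum of the two phase statistics is a conservative way to demand both a sensory and a motor response; the threshold of 3.0 is arbitrary here.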

Extensions of the model.

The core of the model was developed to account for lexical and sublexical processes; it does not provide an account of higher-level linguistic processes, such as syntactic mechanisms. Recent work in our lab has begun to address limited aspects of grammatical processing and to explore how this aspect of language might fit into the model. Several investigators have proposed that the anterior temporal cortex (near auditory fields) plays a critical role in syntactic processing. Our first efforts in this area have simply tested several alternative hypotheses concerning the role of anterior temporal regions (Humphries et al. 2001). More recently, we have explored the effects of prosodic and syntactic structure on anterior temporal activation. This work suggests a functional parcellation of this brain region, with dorsal portions responding to both syntactic and prosodic manipulations and more ventral portions (middle temporal gyrus, MTG) responding only to the presence of syntactic structure.

Sensorimotor Effects on Language Organization

Sign language provides a kind of natural experimental manipulation: it is a system that shares much of the linguistic complexity of spoken language but uses different sensory and motor interfaces. Thus, by comparing the functional anatomy of signed and spoken language, we can get a handle on the extent to which sensory and motor factors influence the organization of language in the brain. Neuropsychological work over the last several years has suggested (1) no difference in the gross lateralization pattern for language or spatial cognitive skills in Deaf signers vs. hearing individuals, (2) similar organization of motor-related language production systems in the left frontal lobe for signed vs. spoken language, (3) similar involvement of left temporal (not parietal) structures in language comprehension, and (4) possibly radical differences in the sublexical coding of speech versus sign, with the former relying on dorsal superior temporal gyrus regions and the latter relying on ventral posterior (vision-related) cortices. Current work in this area focuses on testing predictions made by the functional anatomic model for spoken language (described above). These predictions include the idea that there will be vision-related areas that participate in both perception and production, and that sign language perception systems will bifurcate into a ventral temporal stream for comprehension and a dorsal superior parietal stream for interfacing perception and production of sign language.